Curious about Actual NetApp Certified Hybrid Cloud Administrator (NS0-304) Exam Questions?

Here are sample NetApp Certified Hybrid Cloud Administrator Professional (NS0-304) exam questions from the real exam. You can get more NetApp Certified Hybrid Cloud Administrator (NS0-304) premium practice questions at TestInsights.

An administrator wants to automatically optimize their scale-out web application in GCP. Which product should the administrator use?

Correct : D

To automatically optimize a scale-out web application in Google Cloud Platform (GCP), an administrator should consider using Elastigroup. This tool, offered by Spot by NetApp (formerly Spotinst), is designed to enhance cloud resource utilization by automatically scaling compute resources based on workload demands. Here's why Elastigroup is suitable:

Automatic Scaling: Elastigroup dynamically manages your compute resources, scaling them up or down based on the application demands. This ensures that your application always has the right amount of resources without over-provisioning.

Cost Optimization: By intelligently leveraging spot instances along with on-demand and reserved instances, Elastigroup reduces costs without compromising application availability or performance.

Integration with GCP: Elastigroup seamlessly integrates with Google Cloud, making it straightforward to manage scaling policies directly within the cloud environment.

Elastigroup's capabilities make it an excellent choice for optimizing scale-out applications in cloud environments, particularly for managing the balance between performance, cost, and availability.
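The "scale up or down based on demand" idea can be illustrated with a generic target-tracking rule. This is only a minimal sketch: the function name, parameters, and sizing rule are assumptions made for illustration, and it does not represent Elastigroup's actual algorithm or API.

```python
import math


def desired_instances(current, observed_load, target_load, min_size, max_size):
    """Hypothetical target-tracking rule (not Elastigroup's implementation).

    Scales the instance count in proportion to observed/target load,
    then clamps the result to the group's minimum and maximum size.
    """
    if current <= 0 or target_load <= 0:
        raise ValueError("current and target_load must be positive")
    raw = math.ceil(current * observed_load / target_load)
    return max(min_size, min(max_size, raw))


# Load above target: the group grows.
print(desired_instances(4, observed_load=90, target_load=60, min_size=2, max_size=10))
# Load far below target: the group shrinks, but not below min_size.
print(desired_instances(4, observed_load=15, target_load=60, min_size=2, max_size=10))
```

The clamp is what prevents both over-provisioning (the `max_size` bound) and scaling to an unusable size (the `min_size` bound).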

For more information on using Elastigroup in GCP, see the Elastigroup documentation on the Spot by NetApp website.


Which networking component must be configured to provision volumes from Cloud Volumes Service in Google Cloud?

Correct : C

When provisioning volumes from Cloud Volumes Service in Google Cloud, configuring VPC Peering is essential. This networking component allows your Google Cloud Virtual Private Cloud (VPC) to communicate with the VPC used by Cloud Volumes Service. Here is why this setup matters:

Direct Network Connection: VPC Peering facilitates a direct network connection between two VPCs, which can be within the same Google Cloud project or across different projects. This is crucial for ensuring low-latency and secure access to the Cloud Volume Service.

Resource Accessibility: With VPC Peering, compute instances within your VPC can access volumes provisioned by Cloud Volumes Service as if they were within the same network, simplifying configuration and integration.

Security and Performance: This configuration helps maintain a strong security posture while keeping performance high, because traffic takes fewer network hops and meets fewer potential bottlenecks.

To configure VPC Peering for Cloud Volumes Service in Google Cloud, follow the guidelines in Google Cloud's VPC Peering documentation or the setup instructions available in the Cloud Volumes Service portal.


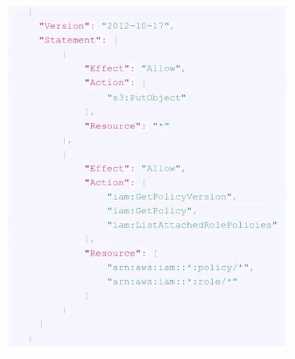

Refer to the exhibit.

An administrator needs to review the IAM role being provisioned for Cloud Data Sense in order to scan S3 buckets. Which two permissions are missing? (Choose two.)

Correct : C, E

For Cloud Data Sense to effectively scan S3 buckets, it requires permissions to list and get objects within the buckets. From the IAM policy provided in the exhibit, the permissions currently include s3:PutObject for object creation and a series of IAM-related permissions such as iam:GetPolicyVersion, iam:GetPolicy, and iam:ListAttachedRolePolicies. However, for scanning purposes, Data Sense needs to read and list the objects in the buckets. Therefore, the missing permissions are:

s3:List*: This permission allows the listing of all objects within the S3 buckets, which is necessary to scan and index the contents.

s3:Get*: This grants the ability to retrieve or read the content of the objects within the S3 buckets, which is essential for scanning the data within them.

These permissions ensure that Cloud Data Sense can access the metadata and contents of objects within S3 to perform its functionality.
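The gap described above can be checked mechanically. The following is a minimal sketch, assuming the policy contains only the actions named in the explanation (the exhibit itself is not reproduced here) and using `s3:ListBucket` and `s3:GetObject` as a hypothetical sample of the read actions a scan needs. The helper names are illustrative, and the wildcard matching only approximates IAM's own rules.

```python
from fnmatch import fnmatchcase

# Actions the explanation says are present in the exhibit's policy.
# Assumption: the exhibit may contain more; only these are named in the text.
GRANTED_ACTIONS = [
    "s3:PutObject",
    "iam:GetPolicyVersion",
    "iam:GetPolicy",
    "iam:ListAttachedRolePolicies",
]

# Hypothetical sample of read actions needed to scan a bucket.
SCAN_ACTIONS = ["s3:ListBucket", "s3:GetObject"]


def is_allowed(action, granted_patterns):
    """Return True if any granted pattern (with * wildcards) matches the action."""
    # IAM action names are case-insensitive, so compare in lowercase.
    return any(fnmatchcase(action.lower(), p.lower()) for p in granted_patterns)


def missing_actions(required, granted_patterns):
    """List the required actions that no granted pattern covers."""
    return [a for a in required if not is_allowed(a, granted_patterns)]


before = missing_actions(SCAN_ACTIONS, GRANTED_ACTIONS)
after = missing_actions(SCAN_ACTIONS, GRANTED_ACTIONS + ["s3:List*", "s3:Get*"])
print(before)  # actions not covered by the original policy
print(after)   # actions still uncovered once the two wildcards are added
```

Note that `iam:GetPolicy` does not help here: the service prefix is part of the action name, so an `iam:` permission never satisfies an `s3:` request.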


An administrator wants to protect Kubernetes-based applications across both on-premises and the cloud. The backup must be application aware and protect all components and data for the application. The administrator wants to use SnapMirror for disaster recovery.

Which product should the administrator use?

Correct : B

Astra Control Service is the appropriate NetApp product for protecting Kubernetes-based applications across both on-premises and cloud environments. Astra Control Service is designed to provide application-aware data management, which means it understands the structure and dependencies of Kubernetes applications and can manage them holistically. This includes backup and recovery, application cloning, and dynamic scaling.

While SnapMirror could be used for disaster recovery by replicating data at the storage layer, it does not inherently understand or manage the Kubernetes application layer directly. SnapCenter is primarily focused on traditional data management for enterprise applications on NetApp storage and does not cater specifically to Kubernetes environments. Cloud Backup Service is for backup to the cloud and also does not provide the Kubernetes application awareness required in this scenario.

Thus, Astra Control Service, which integrates deeply with Kubernetes, allows administrators to manage, protect, and move containerized applications and their data across multiple environments, making it the best fit for the described requirements. For detailed information on Astra Control Service's capabilities with Kubernetes applications, refer to the official NetApp Astra Control Service documentation.


How many private IP addresses are required for an HA CVO configuration in AWS using multiple Availability Zones?

Correct : B

In an HA (High Availability) Cloud Volumes ONTAP (CVO) configuration within AWS that spans multiple Availability Zones, a total of 13 private IP addresses are required. This includes IP addresses for various components such as management interfaces, data LIFs (Logical Interfaces), and intercluster LIFs for both nodes in the HA pair. The distribution of these IP addresses ensures redundancy and failover capabilities across the Availability Zones, which is essential for maintaining high availability and resilience of the storage environment.

NetApp Hybrid Cloud Administrator Course Material (HA Configuration in AWS module)


Total 65 questions